Understanding Data Bias: When Numbers Aren’t Neutral

- Aslam Latheef

- May 7, 2025


In an era where data-driven decisions shape our healthcare, finance, hiring, and law enforcement systems, it’s crucial to recognize one uncomfortable truth: data isn't always neutral. Hidden bias can distort results, reinforce discrimination, and lead to unfair outcomes. This article breaks down 15 essential topics to help you understand, identify, and address data bias.

1. What Is Data Bias?

Data bias occurs when a dataset unfairly favors or disfavors certain groups or outcomes. It's often unintentional but can lead to misleading insights and harmful decisions.

2. Why Bias in Data Matters

Biased data can result in unfair hiring algorithms, faulty credit scores, discriminatory healthcare practices, and more. It can directly affect people’s lives and trust in technology.

3. Historical Roots of Data Bias

Many datasets are built on historically biased systems—like criminal records or medical trials—that have excluded or misrepresented certain populations.

4. Types of Data Bias

Sampling bias: Non-representative datasets

Labeling bias: Subjective or inconsistent annotations

Measurement bias: Faulty data collection tools

Observer bias: Personal prejudices of those collecting or labeling the data
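Sampling bias is the easiest of these to check numerically. The sketch below compares each group's share of a sample with a reference share for the population. The function name, the group labels, and the reference shares are hypothetical placeholders; in practice the reference would come from a source you trust, such as census figures.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return (sample share - population share) for each reference group.

    A negative value means the group is under-represented in the sample.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - expected_share
        for group, expected_share in population_shares.items()
    }


# Hypothetical data: group labels for ten sampled records.
sample = ["A"] * 8 + ["B"] * 2
# Hypothetical reference shares for the population being modeled.
population = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(sample, population)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

In this made-up sample, group B sits 30 percentage points below its reference share, which is the kind of gap worth investigating before any modeling starts.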

5. Bias vs. Variance: Know the Difference

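In the machine learning bias-variance tradeoff, "bias" is the systematic error introduced by a model's simplifying assumptions, and "variance" is how much the model's predictions change when it is trained on a different sample. Data bias is a separate problem: the input data itself is skewed or unfair. A model can have low statistical bias and still learn unfair patterns from biased data, so understanding both is crucial.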

6. How Biased Data Affects Machine Learning

AI models trained on biased data can replicate and even amplify that bias. For example, an algorithm trained on male-dominated job data might rate women lower for the same role.
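To make "replicate and even amplify" concrete, here is a deliberately naive sketch. The "model" only memorizes the historical hire rate per group and then applies a hard threshold. The data, group names, and function names are all invented for illustration; real models are more complex, but the mechanism is similar.

```python
from collections import defaultdict


def fit_group_rates(history):
    """'Train' a naive model: the historical hire rate for each group."""
    totals = defaultdict(int)
    hires = defaultdict(int)
    for group, hired in history:
        totals[group] += 1
        hires[group] += int(hired)
    return {group: hires[group] / totals[group] for group in totals}


def predict_hire(model, group, threshold=0.5):
    """Hard decision: recommend hiring only if the learned rate exceeds the threshold."""
    return model[group] > threshold


# Hypothetical history: equally qualified candidates, skewed past decisions.
history = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 4 + [("women", False)] * 6
)

model = fit_group_rates(history)
decisions = {group: predict_hire(model, group) for group in model}
print(model)
print(decisions)
```

The historical data shows a 60% versus 40% hire rate. Once the learned rates pass through a threshold, every man is recommended and no woman is, so a moderate skew in the data becomes an absolute one in the decisions.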

7. Real-World Examples of Data Bias

Facial recognition technology misidentifying people of color at higher rates than white subjects

Credit scoring systems unfairly penalizing low-income groups

Predictive policing targeting certain neighborhoods disproportionately

8. Data Collection: The First Line of Defense

Bias often begins at data collection. Lack of diversity in samples or flawed survey questions can skew results from the start.

9. Human Bias in Data Annotation

When humans label training data, their assumptions and prejudices can unintentionally affect outcomes—especially in sentiment analysis, image labeling, and medical diagnosis datasets.
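One common way to spot inconsistent labeling is to have two annotators label the same items and measure how often they agree beyond chance. The sketch below computes Cohen's kappa in plain Python; the annotator lists are invented, and the function is an illustrative implementation rather than a replacement for a tested library routine.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability both pick the same label independently.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical sentiment labels from two annotators on six items.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "pos"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")
```

A kappa of 1 means perfect agreement and a value near 0 means agreement no better than chance. Low agreement does not prove prejudice, but it signals that the labeling guidelines leave room for individual assumptions.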

10. Algorithmic Bias vs. Data Bias

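Algorithmic bias stems from the design or training process of a model, while data bias originates in the raw inputs. In practice the two often compound each other: a model objective that rewards overall accuracy, for example, can magnify the under-representation already present in the data.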

11. The Role of Data Governance

Organizations must create standards and oversight for how data is collected, used, and evaluated to minimize bias and improve accountability.

12. Auditing Your Data for Bias

Use tools and techniques such as:

Disaggregated analysis by demographics

Fairness metrics like disparate impact

Bias detection software (e.g., AI Fairness 360, Fairlearn)
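The first two techniques can be sketched without any special tooling. The code below computes a favorable-outcome rate per group (a disaggregated analysis) and then the disparate impact ratio between two groups. The records, group names, and function names are hypothetical; dedicated libraries such as the ones listed above offer more complete metrics.

```python
from collections import defaultdict


def selection_rates(records):
    """Disaggregated analysis: favorable-outcome rate per group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact(rates, unprivileged, privileged):
    """Ratio of the unprivileged group's rate to the privileged group's rate."""
    if rates[privileged] == 0:
        raise ValueError("Privileged group has a zero selection rate")
    return rates[unprivileged] / rates[privileged]


# Hypothetical (group, approved) pairs from a decision system.
records = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(records)
ratio = disparate_impact(rates, unprivileged="group_b", privileged="group_a")
print(rates)
print(f"disparate impact = {ratio:.2f}")
```

A ratio of 1.0 means both groups receive favorable outcomes at the same rate. A commonly cited rule of thumb, the "four-fifths rule" from US employment guidance, treats a ratio below 0.8 as a signal that warrants closer review; the 0.5 in this invented example would fall well under it.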

13. Legal and Ethical Implications

Laws such as the EU's GDPR and AI Act are starting to require transparency and fairness in automated decision-making. Organizations that ignore bias may face legal action and reputational harm.

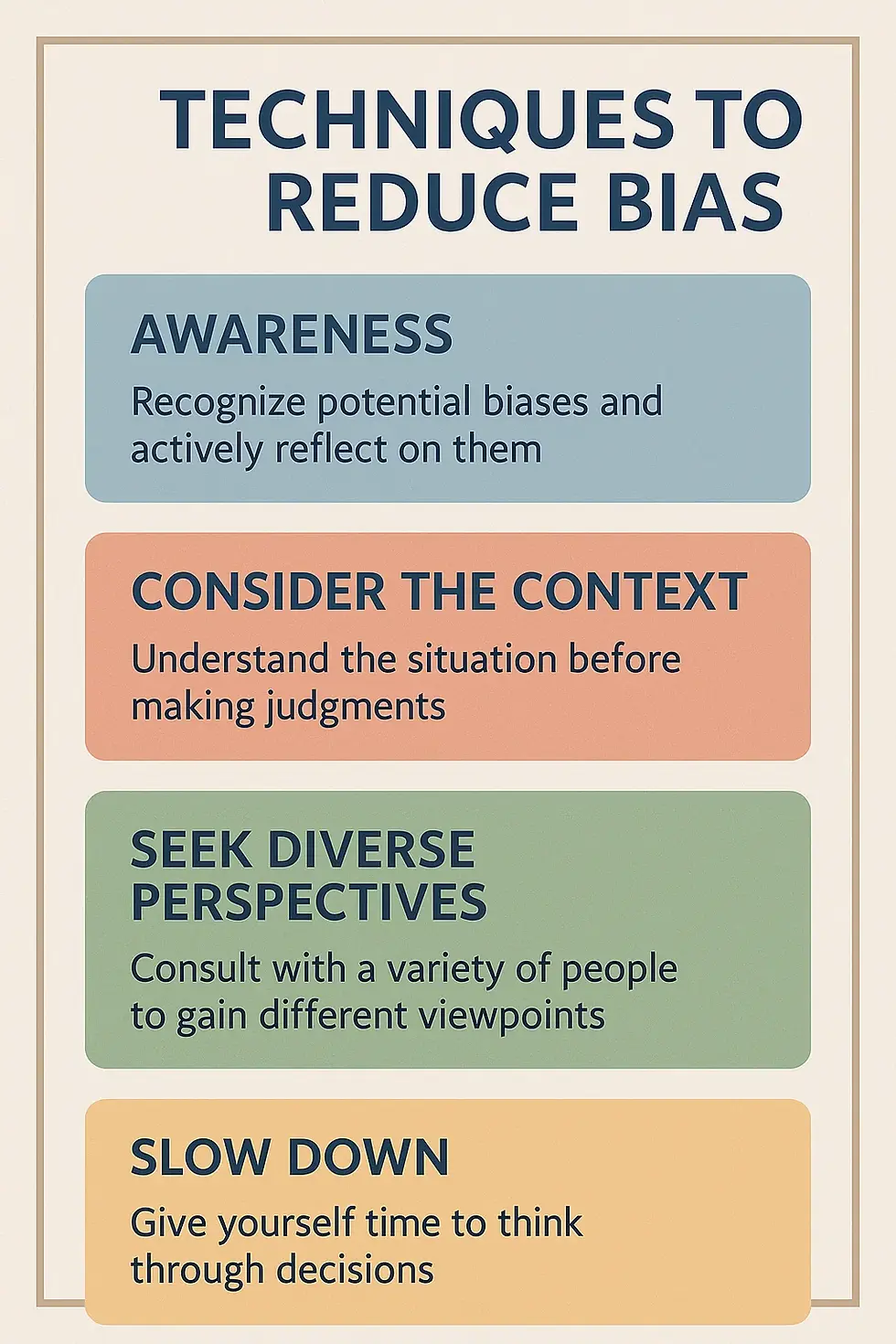

14. Techniques to Reduce Bias

Data balancing (e.g., oversampling underrepresented groups)

Algorithm tuning for fairness constraints

Transparent model documentation (like model cards and datasheets for datasets)
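As an illustration of data balancing, the sketch below randomly duplicates records from smaller groups until every group matches the largest one. The record layout and the `group_of` accessor are assumptions made for this example, and random oversampling is only one of several balancing strategies.

```python
import random
from collections import defaultdict


def oversample_to_balance(records, group_of, seed=0):
    """Duplicate records from smaller groups until all groups match the largest."""
    if not records:
        return []
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    by_group = defaultdict(list)
    for record in records:
        by_group[group_of(record)].append(record)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Hypothetical dataset: eight records from group A, two from group B.
records = (
    [{"group": "A", "id": i} for i in range(8)]
    + [{"group": "B", "id": i} for i in range(2)]
)

balanced = oversample_to_balance(records, group_of=lambda r: r["group"])
print(len(balanced))
```

Oversampling is normally applied to the training split only, so that evaluation still reflects real-world proportions. Because it repeats existing records instead of adding new information, it can encourage overfitting to the few examples available for the smaller group.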

15. Building an Ethical Data Culture

Combatting data bias isn’t just technical—it’s cultural. Companies and institutions must prioritize diversity, ethics, and inclusion in their data practices.

Conclusion

Data can drive innovation, but it must be used responsibly. By understanding the sources and consequences of data bias, we can create smarter, fairer, and more equitable systems. Bias isn't always easy to see—but once you know what to look for, you’re one step closer to fixing it.
